By: Kingston Wray

Editors’ Note: This is a special edition of Token Talk written by our high school intern. He attends The Bush School in Seattle and hopes to be part of its 2027 graduating class.

My high school in Seattle has been so slow to adopt AI that we are still operating under a Grammarly ban.

This year, the admin held assemblies to explain new rules, including a three-strike policy under which students caught using AI three times could be kicked out. The approach feels extreme, especially when AI has become part of everyday life outside the classroom.

Still, I understand the intention. High school is meant to be a place where students learn how to think. If I outsourced my writing — and my thinking along with it — to a chatbot, I would miss the chance to develop fundamental skills like reading carefully, writing clearly, and forming ideas through sustained effort.

Ironically, those strict rules ended up teaching me more about the future of AI than any demo ever could. When the consequences of mistakes are high, AI needs to be disciplined, constrained, and governed.

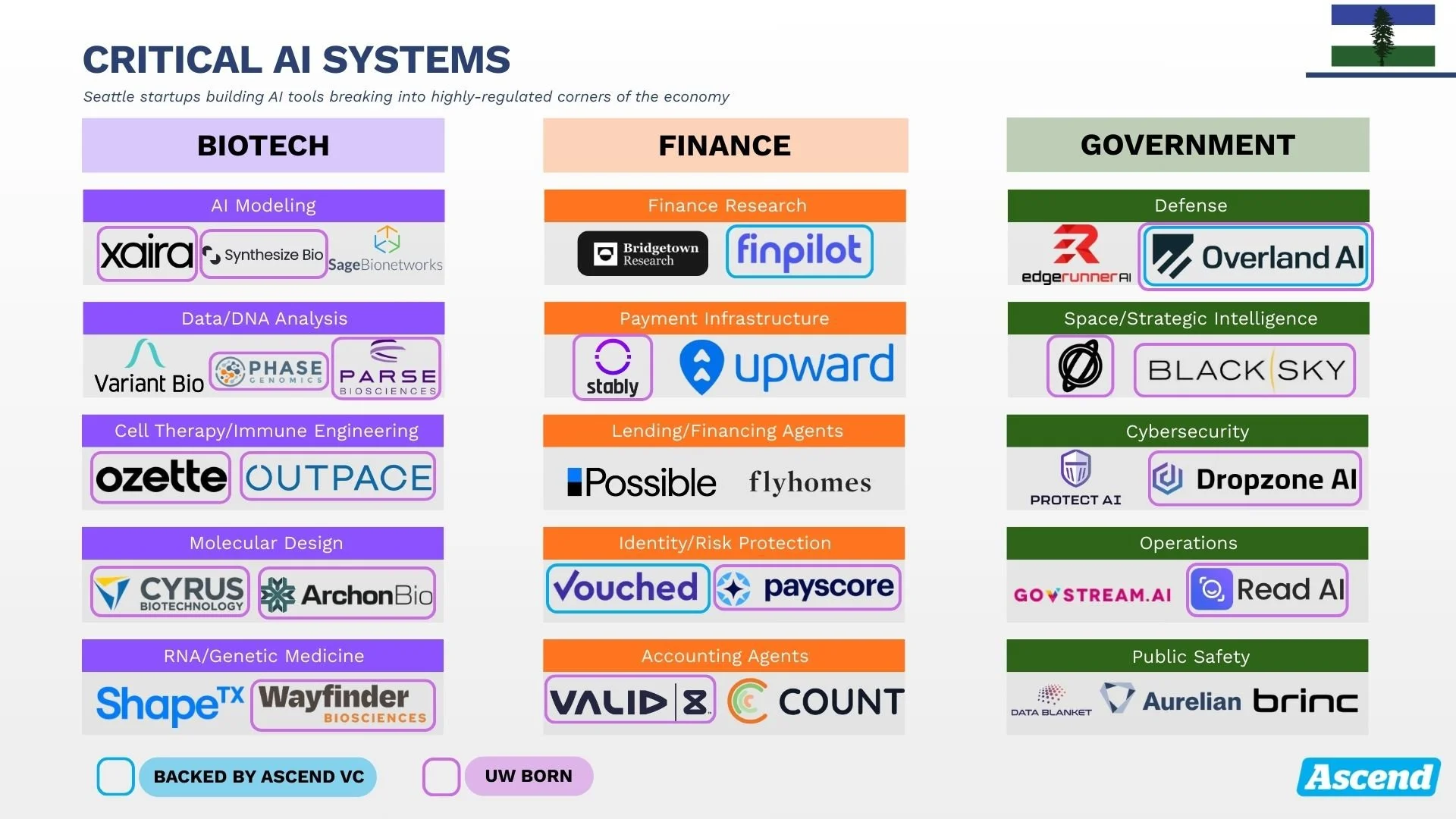

That insight shaped my internship at Ascend. Over the last three weeks, I studied Seattle startups building AI in highly regulated industries, including biotech, finance, and government, and traced where their founders came from.

In these industries, AI has to meet a much higher standard. It needs to be accurate enough to support biotech research and cell mapping, transparent enough to produce decision logs that can be audited in finance, and built from the start to comply with strict federal and state regulations.

Seattle has a real advantage in this kind of work. The region brings together top research institutions, operators with experience in high-stakes environments, and proximity to government and regulated buyers. That combination produces founders who understand not only what AI can do, but where it must be precise, constrained, and accountable.

In biotech, the University of Washington plays a central role in how AI is applied to biological research and drug development. Many Seattle biotech founders come out of UW labs with deep experience using computational methods alongside experimental biology.

The core piece of this flywheel is the UW Institute for Protein Design (UW IPD), or better put, the David Baker Ecosystem.

Baker, who shared the Nobel Prize in chemistry in 2024, has spent the past two decades researching protein design. He also co-founded biotech company Xaira, which uses AI to make predictions that accelerate drug discovery. The startup announced a $1 billion Seed round last year, the largest funding round ever for a Seattle-adjacent company.

Under Baker’s guidance, UW postdocs and PhD students train in labs where computational biology research is regularly translated into venture-backed startups.

Some examples include:

Cyrus Biotech, founded in 2014 by Lucas Nivon and Yifan Song, who met at UW IPD, is an AI company focused on molecular design. It raised more than $20 million in multiple financing rounds.

Parse Biosciences, founded in 2018 by Alex Rosenberg and Charles Roco, who developed the technology in UW labs, is a data analysis biotech company. The startup has raised $100 million across multiple rounds.

If UW is Seattle’s biotech founder engine, then Amazon is the region’s security-and-defense pipeline. Drawing on their experience operating Amazon’s massive cloud infrastructure and logistics division, founders build AI that is secure and reliable at scale.

Examples include:

Protect AI, an AI security platform, was founded by Ian Swanson and Daryan Dehghanpisheh, both formerly at AWS. The company raised $108 million in multiple rounds and was acquired by Palo Alto Networks last year for at least $500 million.

Brinc Drones, founded by Blake Resnick, makes tactical drones for emergency responders. The company hired 15 Prime Air employees, bringing their drone expertise in-house, and has raised over $150 million in multiple funding rounds.

Along with these founder factories, Seattle has institutional adjacency through the regional military presence of Joint Base Lewis-McChord (JBLM) and Naval Base Kitsap. Companies like Overland, an Ascend portfolio company, work with JBLM to test their autonomous ground vehicles.

All of that turns Seattle into a compounding loop: research trains founders, founders attract capital, capital funds more spinouts, and proximity to buyers makes the startup hub viable.

Looking forward, the most interesting opportunities sit at the intersection of AI capability and institutional constraint.

In biotech, one of the hardest problems is getting a drug to the right tissue intact. A delivery simulation tool could surface breakdown or mis-targeting early.

In FinTech, a “Financial Weather Forecast” engine could model revenue shocks, cost spikes, and demand swings to surface early warnings about cash flow and runway.

In government, a so-called “AI Inspector” could sit on top of every government AI action, checking whether use is allowed, assessing impact, testing for bias, and recording auditable logs.

Across biotech, finance, and government, the founders who succeed are the ones who learn how to earn trust. They build AI that works inside constraints, with accountability and evidence built in. Seattle has become a place where that kind of founder is trained.

Maybe one of them will take that mindset into education and build an AI system that helps students think, shows their work, and gives teachers confidence in the outcome.

When that happens, I hope my school will reconsider its Grammarly ban.

If you are building in any of these regulated industries, we’d love to hear from you.